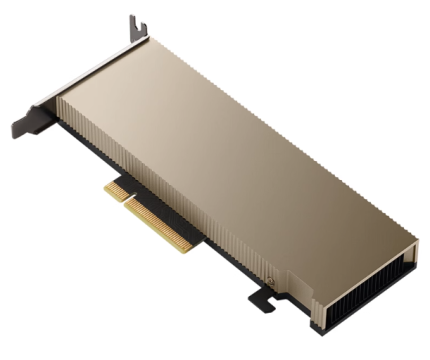

NVIDIA Tesla V100 32GB HBM2 PCI Express Graphics Card 900-2G500-0010-000

| General Information | Manufacturer | NVIDIA Corporation |

| Manufacturer Part Number | 900-2G500-0010-000 | |

| Manufacturer Website Address | http://www.nvidia.com | |

| Brand Name | NVIDIA | |

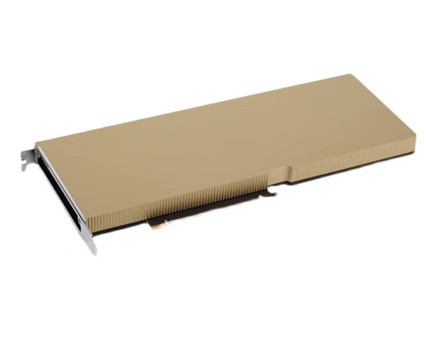

| Product Name | Tesla V100 GPU Accelerator | |

| Product Type | Graphic Card | |

| Technical Information | API Supported | OpenACC |

| OpenCL | ||

| DirectCompute | ||

| Processor & Chipset | Chipset Manufacturer | NVIDIA |

| Chipset Line | Tesla | |

| GPU Architecture | Volta | |

| Chipset Model | V100 | |

| Number Of GPUs | 1 | |

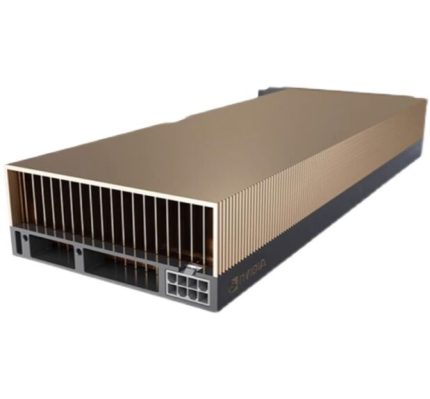

| Double-Precision Performance | 7 TFLOPS | |

| Single-Precision Performance | 14 TFLOPS | |

| Processor Cores | 640 Tensor | |

| 5120 CUDA |

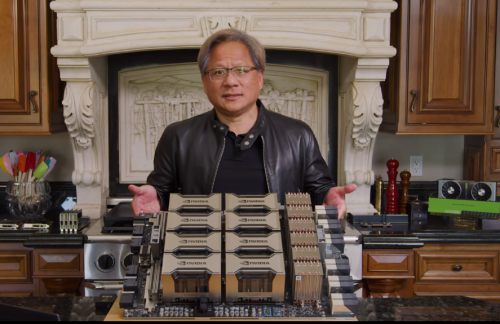

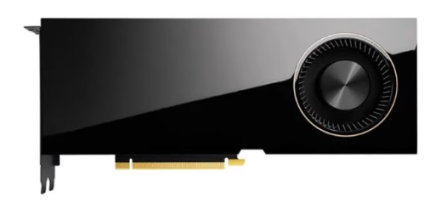

AI Training with Tesla V100

From recognizing speech to training virtual personal assistants and teaching autonomous cars to drive, data scientists are taking on increasingly complex challenges with AI. Solving these kinds of problems requires training deep learning models that are exponentially growing in complexity, in a practical amount of time.

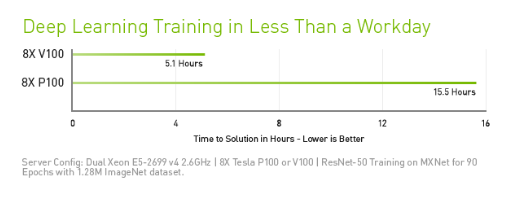

With 640 Tensor Cores, Tesla V100 is the world’s first GPU to break the 100 teraFLOPS (TFLOPS) barrier of deep learning performance. The next generation of NVIDIA NVLink™ connects multiple V100 GPUs at up to 300 GB/s to create the world’s most powerful computing servers. AI models that would consume weeks of computing resources on previous systems can now be trained in a few days. With this dramatic reduction in training time, a whole new world of problems will now be solvable with AI.

AI Inference with Tesla V100

To connect us with the most relevant information, services, and products, hyperscale companies have started to tap into AI. However, keeping up with user demand is a daunting challenge. For example, the world’s largest hyperscale company recently estimated that they would need to double their data center capacity if every user spent just three minutes a day using their speech recognition service.

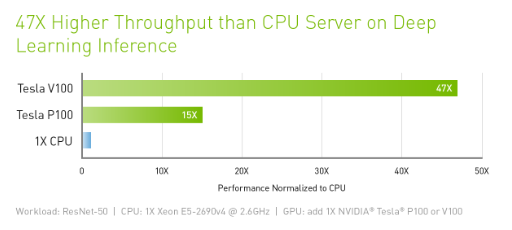

Tesla V100 is engineered to provide maximum performance in existing hyperscale server racks. With AI at its core, Tesla V100 GPU delivers 47X higher inference performance than a CPU server. This giant leap in throughput and efficiency will make the scale-out of AI services practical.

Tesla V100 Key Features for Deep Learning Training

Deep Learning is solving important scientific, enterprise, and consumer problems that seemed beyond our reach just a few years back. Every major deep learning framework is optimized for NVIDIA GPUs, enabling data scientists and researchers to leverage artificial intelligence for their work. When running deep learning training and inference frameworks, a data center with Tesla V100 GPUs can save up to 85% in server and infrastructure acquisition costs.

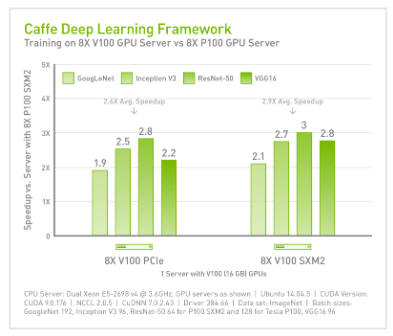

Caffe, TensorFlow, and CNTK are up to 3x faster with Tesla V100 compared to P100

100% of the top deep learning frameworks are GPU-accelerated

Up to 125 TFLOPS of mixed-precision Tensor Core operations

Up to 32 GB of memory capacity with up to 900 GB/s memory bandwidth
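The headline throughput figures on this page (7, 14, and 125 TFLOPS) can be roughly reproduced from the core counts in the specification table. The sketch below is a back-of-the-envelope estimate only. The clock speeds, the FP64 unit count, and the per-Tensor-Core rate are assumptions that do not appear in this listing, and the helper name `peak_tflops` is purely illustrative.

```python
# Rough peak-throughput estimate: units x operations per clock x clock rate.
# Values marked "assumption" are NOT stated in the listing above.

CUDA_CORES = 5120      # from the specification table
TENSOR_CORES = 640     # from the specification table

PCIE_BOOST_HZ = 1.38e9  # assumption: boost clock of the PCIe card
SXM2_BOOST_HZ = 1.53e9  # assumption: boost clock of the NVLink (SXM2) variant

FP64_UNITS = CUDA_CORES // 2    # assumption: half as many FP64 units as FP32
FLOPS_PER_FMA = 2               # one fused multiply-add counts as 2 FLOPs
FMAS_PER_TENSOR_CORE_CLOCK = 64 # assumption: one 4x4x4 matrix FMA per clock


def peak_tflops(units, flops_per_unit_per_clock, clock_hz):
    """Return theoretical peak throughput in TFLOPS."""
    return units * flops_per_unit_per_clock * clock_hz / 1e12


fp32_tflops = peak_tflops(CUDA_CORES, FLOPS_PER_FMA, PCIE_BOOST_HZ)
fp64_tflops = peak_tflops(FP64_UNITS, FLOPS_PER_FMA, PCIE_BOOST_HZ)
tensor_tflops_pcie = peak_tflops(
    TENSOR_CORES, FMAS_PER_TENSOR_CORE_CLOCK * FLOPS_PER_FMA, PCIE_BOOST_HZ
)
tensor_tflops_sxm2 = peak_tflops(
    TENSOR_CORES, FMAS_PER_TENSOR_CORE_CLOCK * FLOPS_PER_FMA, SXM2_BOOST_HZ
)

print(f"FP32 (PCIe clock):   {fp32_tflops:.1f} TFLOPS")
print(f"FP64 (PCIe clock):   {fp64_tflops:.1f} TFLOPS")
print(f"Tensor (PCIe clock): {tensor_tflops_pcie:.1f} TFLOPS")
print(f"Tensor (SXM2 clock): {tensor_tflops_sxm2:.1f} TFLOPS")
```

Under these assumed clocks, the single- and double-precision estimates land close to the 14 and 7 TFLOPS in the table. The 125 TFLOPS figure only appears at the higher SXM2 clock, which suggests that the "up to" number describes the NVLink variant rather than this PCI Express card.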

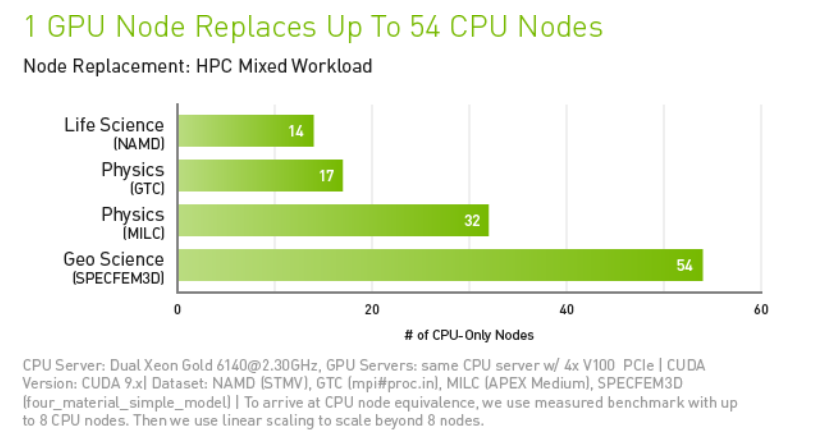


Contact Us:

Email: etestgroup@hotmail.com

Mobile Phone: +86-134 1017 1939

Skype: maryye214

WhatsApp / WeChat: +86 13 1017 1939

QQ: 2501075324
