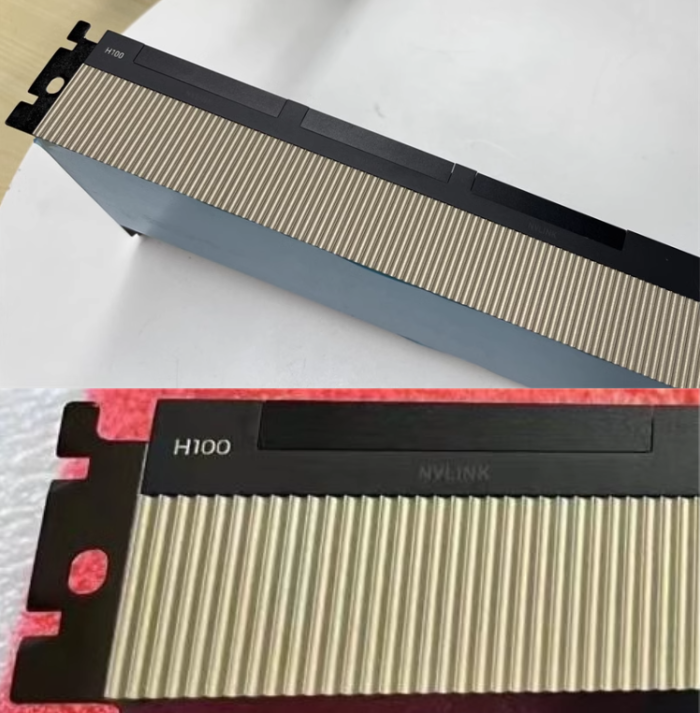

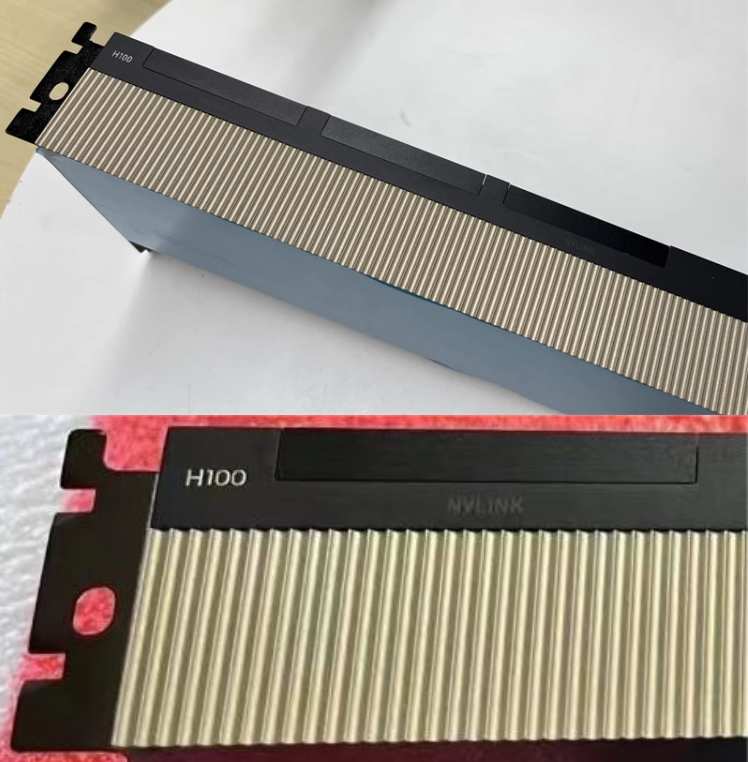

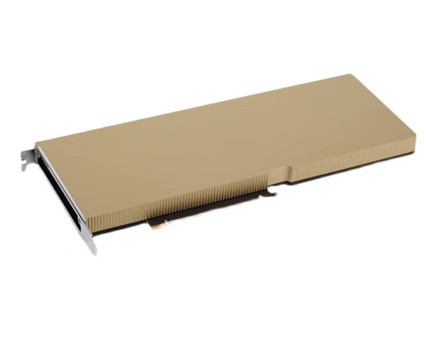

NVIDIA TESLA H100 80GB Graphics Card – PCIe 900-21010-0000-000

An Order-of-Magnitude Leap for Accelerated Computing

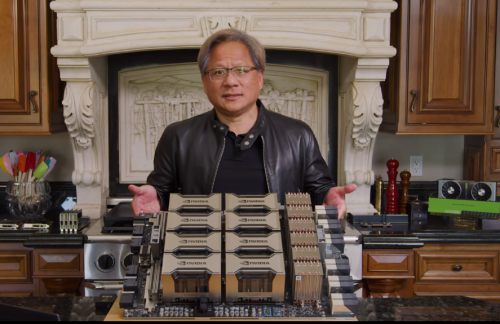

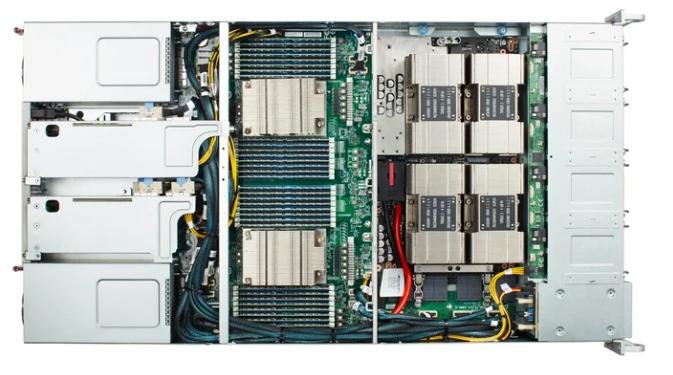

Tap into unprecedented performance, scalability, and security for every workload with the NVIDIA® H100 Tensor Core GPU. With the NVIDIA NVLink® Switch System, up to 256 H100 GPUs can be connected to accelerate exascale workloads. The GPU also includes a dedicated Transformer Engine for trillion-parameter language models. Together, the H100's technology innovations can speed up large language models (LLMs) by up to 30X over the previous generation, delivering industry-leading conversational AI.

Supercharge Large Language Model Inference

For LLMs of up to 175 billion parameters, the PCIe-based H100 NVL with NVLink bridge combines the Transformer Engine, NVLink, and 188GB of HBM3 memory to provide optimal performance and easy scaling across any data center, bringing LLMs to the mainstream. Servers equipped with H100 NVL GPUs increase GPT-175B model performance by up to 12X over NVIDIA DGX™ A100 systems while maintaining low latency in power-constrained data center environments.

Ready for Enterprise AI?

Enterprise adoption of AI is now mainstream, and organizations need end-to-end, AI-ready infrastructure that will accelerate them into this new era.

NVIDIA H100 GPUs for mainstream servers come with a five-year subscription to the NVIDIA AI Enterprise software suite, including enterprise support, simplifying AI adoption with the highest performance. This gives organizations access to the AI frameworks and tools they need to build H100-accelerated AI workflows such as AI chatbots, recommendation engines, vision AI, and more.

Product Specifications:

| Specification | H100 SXM | H100 PCIe | H100 NVL¹ |
|---|---|---|---|
| FP64 | 34 teraFLOPS | 26 teraFLOPS | 68 teraFLOPS |
| FP64 Tensor Core | 67 teraFLOPS | 51 teraFLOPS | 134 teraFLOPS |
| FP32 | 67 teraFLOPS | 51 teraFLOPS | 134 teraFLOPS |
| TF32 Tensor Core | 989 teraFLOPS² | 756 teraFLOPS² | 1,979 teraFLOPS² |
| BFLOAT16 Tensor Core | 1,979 teraFLOPS² | 1,513 teraFLOPS² | 3,958 teraFLOPS² |
| FP16 Tensor Core | 1,979 teraFLOPS² | 1,513 teraFLOPS² | 3,958 teraFLOPS² |
| FP8 Tensor Core | 3,958 teraFLOPS² | 3,026 teraFLOPS² | 7,916 teraFLOPS² |
| INT8 Tensor Core | 3,958 TOPS² | 3,026 TOPS² | 7,916 TOPS² |
| GPU memory | 80GB | 80GB | 188GB |
| GPU memory bandwidth | 3.35TB/s | 2TB/s | 7.8TB/s³ |
| Decoders | 7 NVDEC, 7 JPEG | 7 NVDEC, 7 JPEG | 14 NVDEC, 14 JPEG |
| Max thermal design power (TDP) | Up to 700W (configurable) | 300–350W (configurable) | 2x 350–400W (configurable) |
| Multi-Instance GPUs | Up to 7 MIGs @ 10GB each | Up to 7 MIGs @ 10GB each | Up to 14 MIGs @ 12GB each |
| Form factor | SXM | PCIe, dual-slot air-cooled | 2x PCIe, dual-slot air-cooled |
| Interconnect | NVLink: 900GB/s; PCIe Gen5: 128GB/s | NVLink: 600GB/s; PCIe Gen5: 128GB/s | NVLink: 600GB/s; PCIe Gen5: 128GB/s |
| Server options | NVIDIA HGX H100 Partner and NVIDIA-Certified Systems™ with 4 or 8 GPUs; NVIDIA DGX H100 with 8 GPUs | Partner and NVIDIA-Certified Systems with 1–8 GPUs | Partner and NVIDIA-Certified Systems with 2–4 pairs |
| NVIDIA AI Enterprise | Add-on | Included | Included |

1. Preliminary specifications, subject to change. Specifications shown are for two H100 NVL PCIe cards paired with an NVLink Bridge.

2. With sparsity.

3. Aggregate HBM bandwidth.
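Because the Tensor Core rows are quoted with sparsity (footnote 2) and the H100 NVL column describes two bridged cards (footnote 1), the headline numbers are not directly comparable with dense, single-card figures. The sketch below shows that conversion in Python, assuming NVIDIA's convention that the dense peak is one-half of the "with sparsity" figure. The dictionary and function names are illustrative only, and the results are theoretical peaks, not measured performance.

```python
"""Convert the sparse Tensor Core peaks in the table to dense, per-card peaks."""

# Values copied from the specification table (teraFLOPS, "with sparsity").
# The NVL entry describes a pair of cards joined by an NVLink Bridge.
SPARSE_PEAK_TFLOPS = {
    "H100 SXM": {"TF32": 989, "BF16": 1979, "FP16": 1979, "FP8": 3958},
    "H100 PCIe": {"TF32": 756, "BF16": 1513, "FP16": 1513, "FP8": 3026},
    "H100 NVL": {"TF32": 1979, "BF16": 3958, "FP16": 3958, "FP8": 7916},
}

# Number of physical cards each column describes (footnote 1).
CARDS_PER_ENTRY = {"H100 SXM": 1, "H100 PCIe": 1, "H100 NVL": 2}

# Assumption: sparse peaks are twice the dense peaks, so dense = sparse / 2.
SPARSITY_SPEEDUP = 2


def dense_per_card(model: str, precision: str) -> float:
    """Return the theoretical dense peak for a single card, in teraFLOPS."""
    sparse_total = SPARSE_PEAK_TFLOPS[model][precision]
    return sparse_total / SPARSITY_SPEEDUP / CARDS_PER_ENTRY[model]


if __name__ == "__main__":
    # Print the dense FP8 peak for one card of each variant.
    for model in SPARSE_PEAK_TFLOPS:
        fp8 = dense_per_card(model, "FP8")
        print(f"{model}: ~{fp8:,.1f} dense FP8 teraFLOPS per card")
```

The same two divisions apply to any row marked with footnote 2; rows without that footnote (FP64, FP32) are already dense values.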

Contact Us:

Email: etestgroup@hotmail.com

Mobile Phone: +86-134 1017 1939

Skype: maryye214

WhatsApp / WeChat: +86 13 1017 1939

QQ: 2501075324
